Changsheng Wang

Room 3210

428 S Shaw LN

East Lansing, Michigan

United States of America

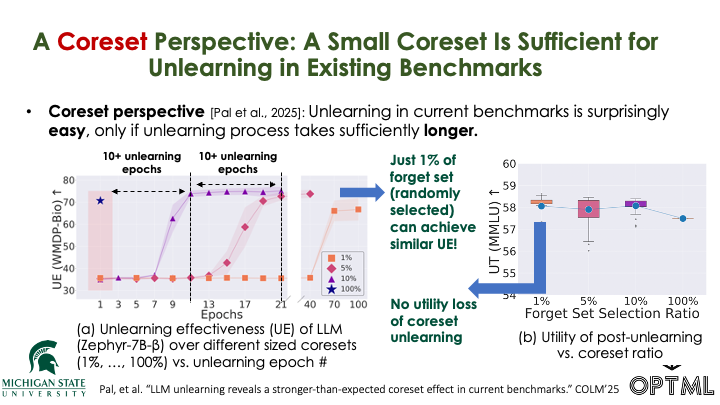

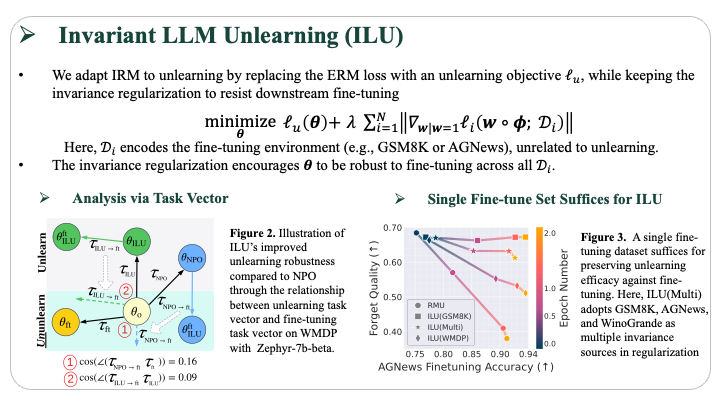

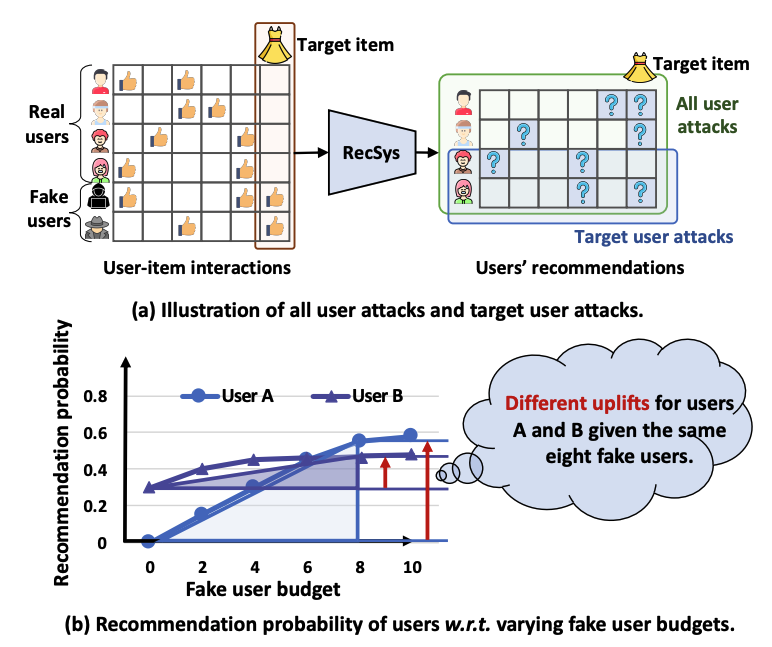

Changsheng Wang (王昌盛) is a second-year Ph.D. student in Computer Science at Michigan State University, working in the OPTML Group under the supervision of Prof. Sijia Liu. His research centers on trustworthy AI and AI safety, with a focus on large language models (LLMs). He is particularly interested in building machine learning systems that are robust, efficient, and secure in adversarial and real-world settings. Before joining MSU, Changsheng received his B.S. in Data Science and Big Data Technology from the University of Science and Technology of China (USTC), where he was advised by Prof. Xiangnan He. He has completed research internships at Intel and has collaborated with IBM Research on trustworthy and safe AI.

Research Keywords: Machine Unlearning, AI Safety, Adversarial Training, Fine-Tuning Efficiency, Watermarking, LLM Robustness, Diffusion Models, Recommender System Security, Optimization.

Looking for Collaboration!

I am currently seeking a Summer 2026 internship in an industrial or academic research lab working on AI safety, LLM robustness, or foundation model alignment. Feel free to reach out, add me on WeChat, or connect with me on LinkedIn.

News

| Sep 18, 2025 | |
|---|---|
| Aug 20, 2025 | |
| Aug 10, 2025 | |
| Jul 7, 2025 | |
| May 19, 2025 | |
| Apr 17, 2025 | |
| Dec 1, 2024 | |